Hi, I’m Mark and welcome to my newsletter, Tech Accelerator, as we explore the latest in AI/ML 🤖, Cloud ☁️, SaaS 💻, and Platform & Product Engineering. 📚✨💫

Join our vibrant community of avid readers who receive TechAccelerator straight to their inbox every week. 📩 Best part? It's absolutely free! 🆓 ✨

Let's dive right into the most captivating tech news and updates!

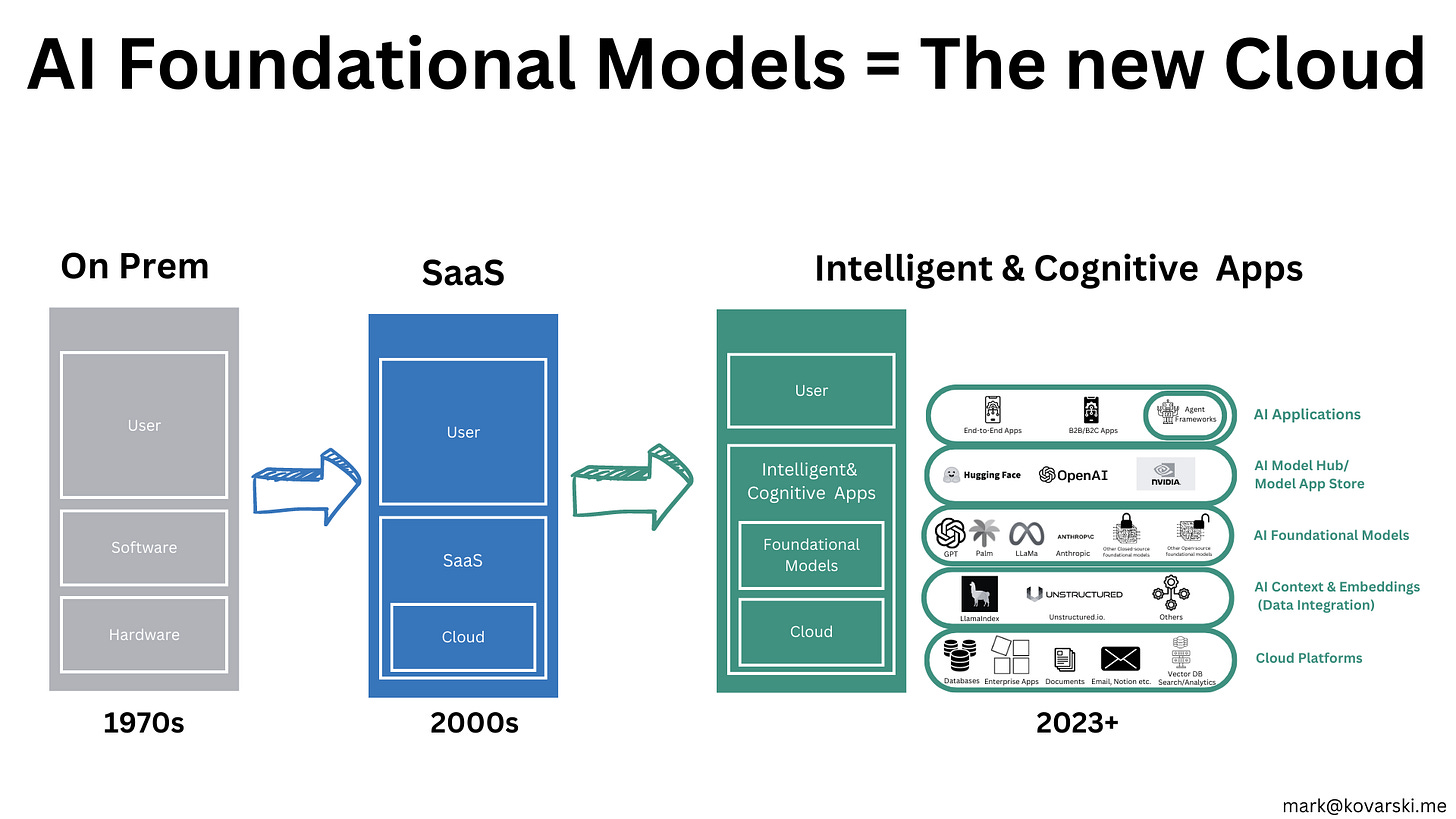

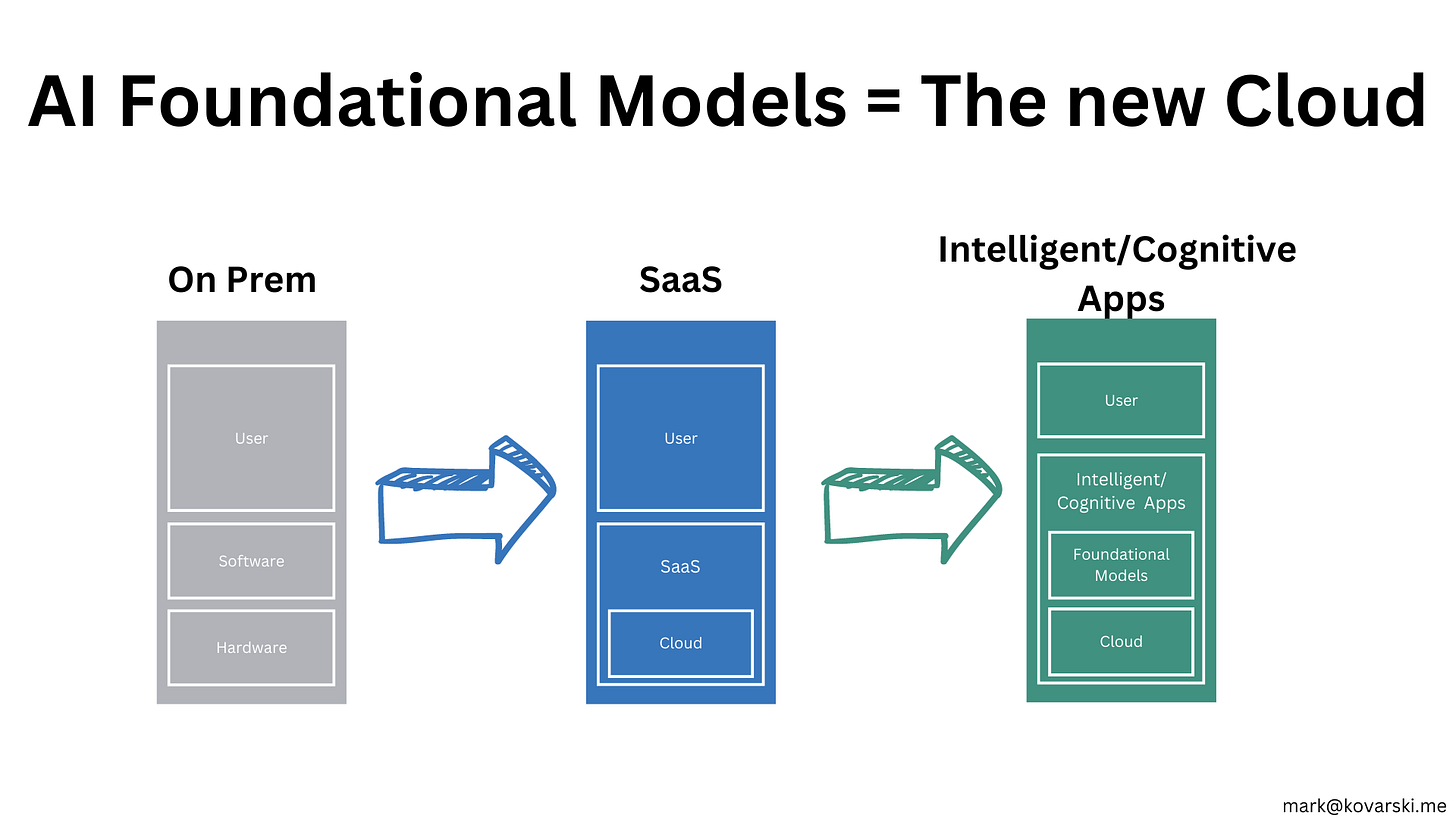

Rapid innovation in AI and large language models (LLMs) has sparked a fast transition from the traditional cloud and SaaS landscape to a new paradigm. However, AI as a Service (AIaaS) and its underlying layers remain poorly understood. Enterprises are still grappling with how to integrate AI into their systems and operations, and are seeking clarity amid its transformative power.

Major cloud service providers and third-party vendors are accelerating their efforts to integrate AI's core elements (data, advanced algorithms, and AI-optimized hardware) into their cloud services. This rapid transition is ushering in an unprecedented era that is reshaping the very fabric of our society and every business in existence today.

While this transition unfolds, the market remains a fragmented ecosystem in which providers offer specialized point solutions that do not cover all layers of the AI stack. Several challenges persist:

Limited Interoperability: 🤝

Current AI offerings are bundled packages with limited interoperability between vendors, which makes it difficult to integrate different solutions.

Vendor Lock-in and Proprietary Concerns: 🔒🏭

Bundled AI offerings raise concerns about vendor lock-in and proprietary limitations, reducing flexibility and hindering customization.

Restricted Extension of Functionality: 🚫🧩

Tight coupling between components in different layers makes it hard to add new functionality, impeding innovation and adaptation.

Lack of Developer Flexibility: 🚧💻

Developers face limitations in flexibility and adaptability when choosing suitable AI components for practical implementation.

Reliability Challenges: ❓🔌

Bundling multiple AI offerings into one package raises concerns about reliability, making it difficult to define transparent service-level agreements (SLAs).

Inhibited Open-Source Community Support: 🙅‍♂️🔓🌐

Bundled AI offerings are perceived as tightly controlled systems, inhibiting support from the open-source community and limiting collaboration.

Increased Lock-in and Migration Costs: 💰🚚🔀

Bundled AI offerings raise lock-in costs, leading to potential incompatibility issues and higher future costs when migrating between vendors.


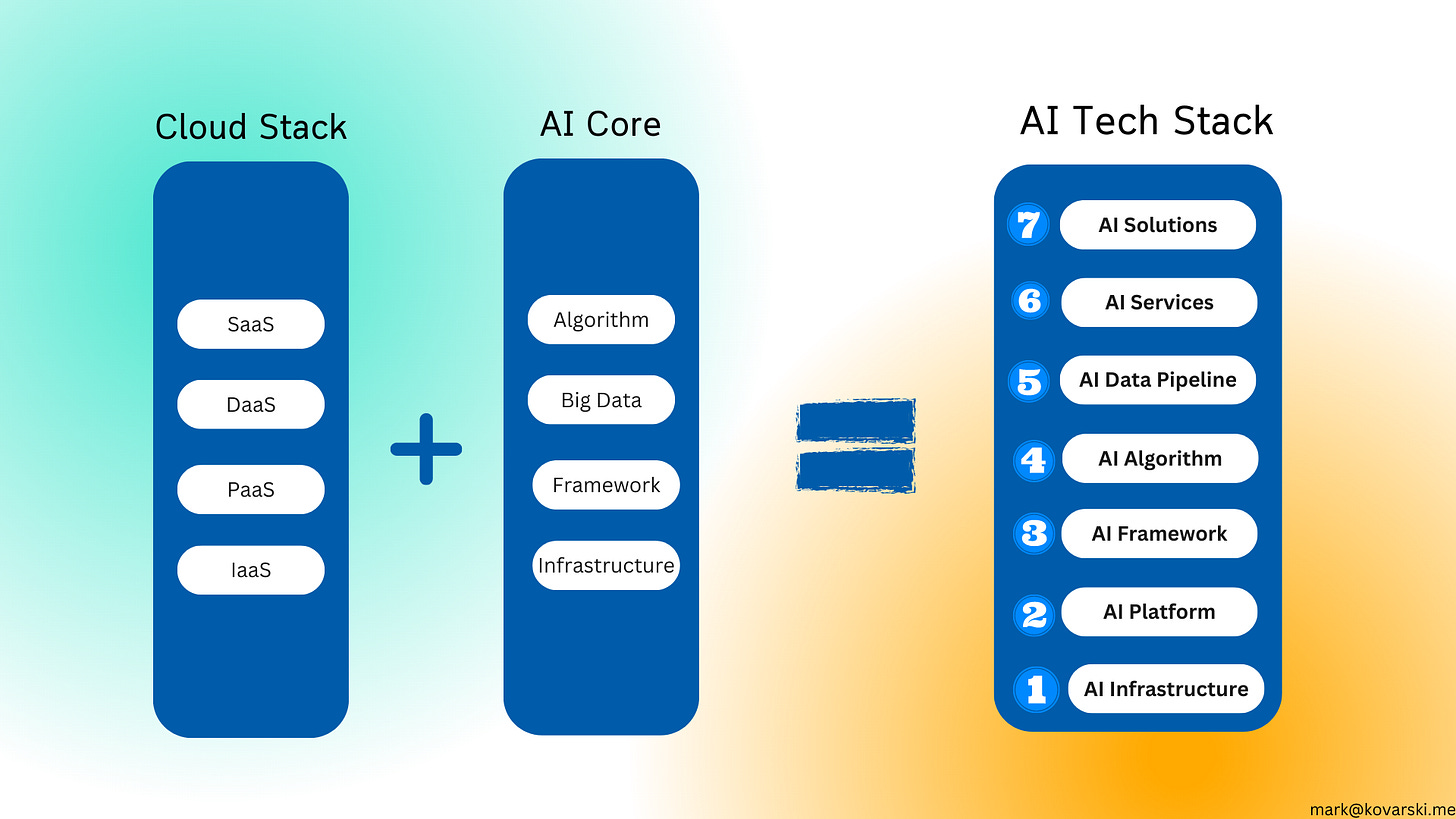

To address some of these challenges, a structured AI Tech Stack is shown below:

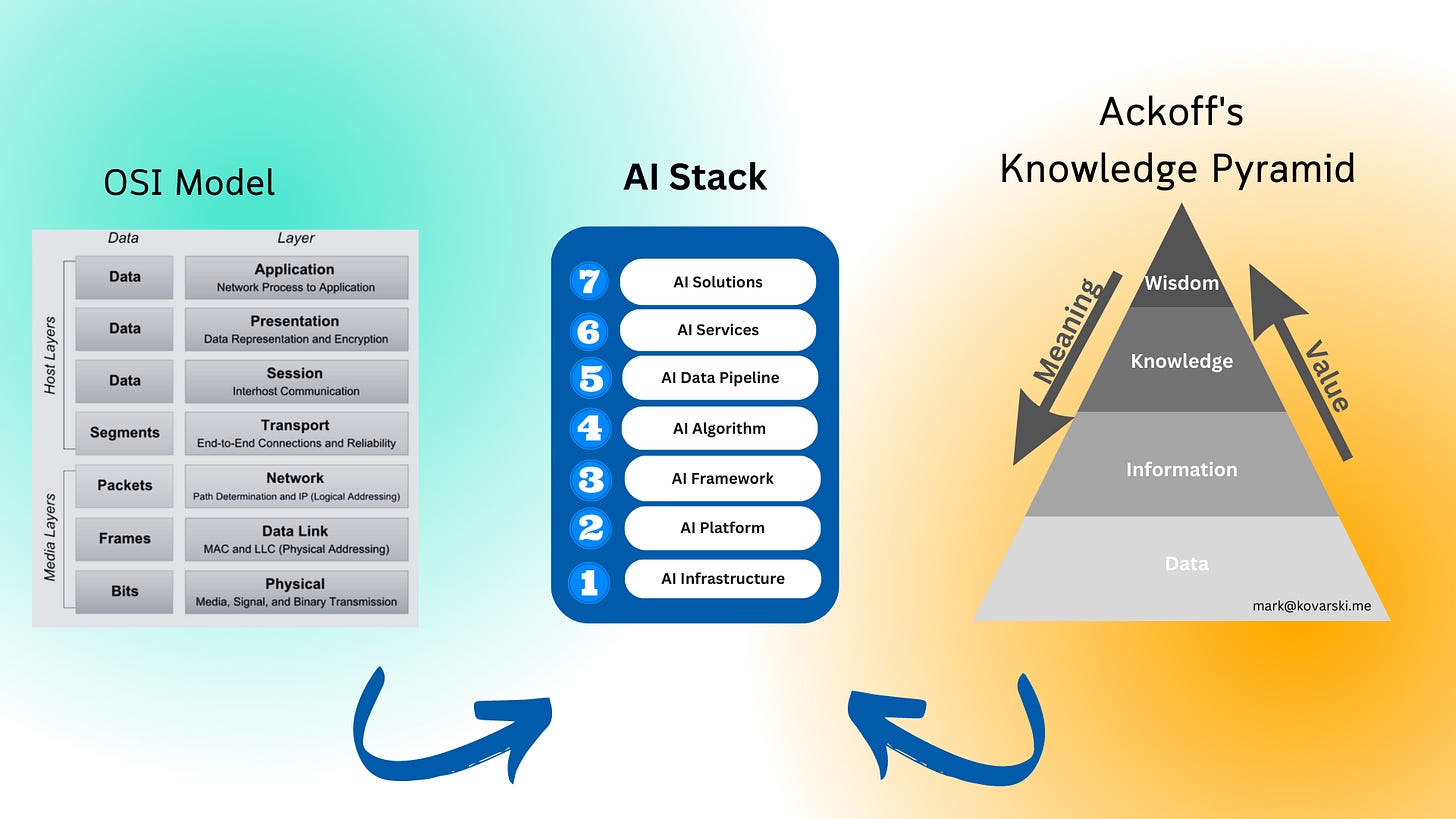

The AI Stack combines Ackoff’s Knowledge Pyramid with the Open Systems Interconnection (OSI) reference model:

Ackoff's Knowledge Pyramid provides a hierarchical structure for classifying information, comprising data, information, knowledge, understanding, and wisdom.

In parallel to Ackoff's pyramid, the AI Stack adopts the concept of the OSI reference model, a widely recognized framework for network communication. The AI Stack embraces a stack model, aligning with the layered structure of the OSI model. This allows for a clear delineation and understanding of the different functionalities and interactions within the AI system.

The fusion of Ackoff's Knowledge Pyramid and the OSI reference model into the AI Stack provides a framework for understanding the layers and functionalities of AI systems. This stack model highlights the hierarchical organization, the expansion of layers based on Ackoff's pyramid, and the clear delineation inspired by the OSI model.

The AI Stack

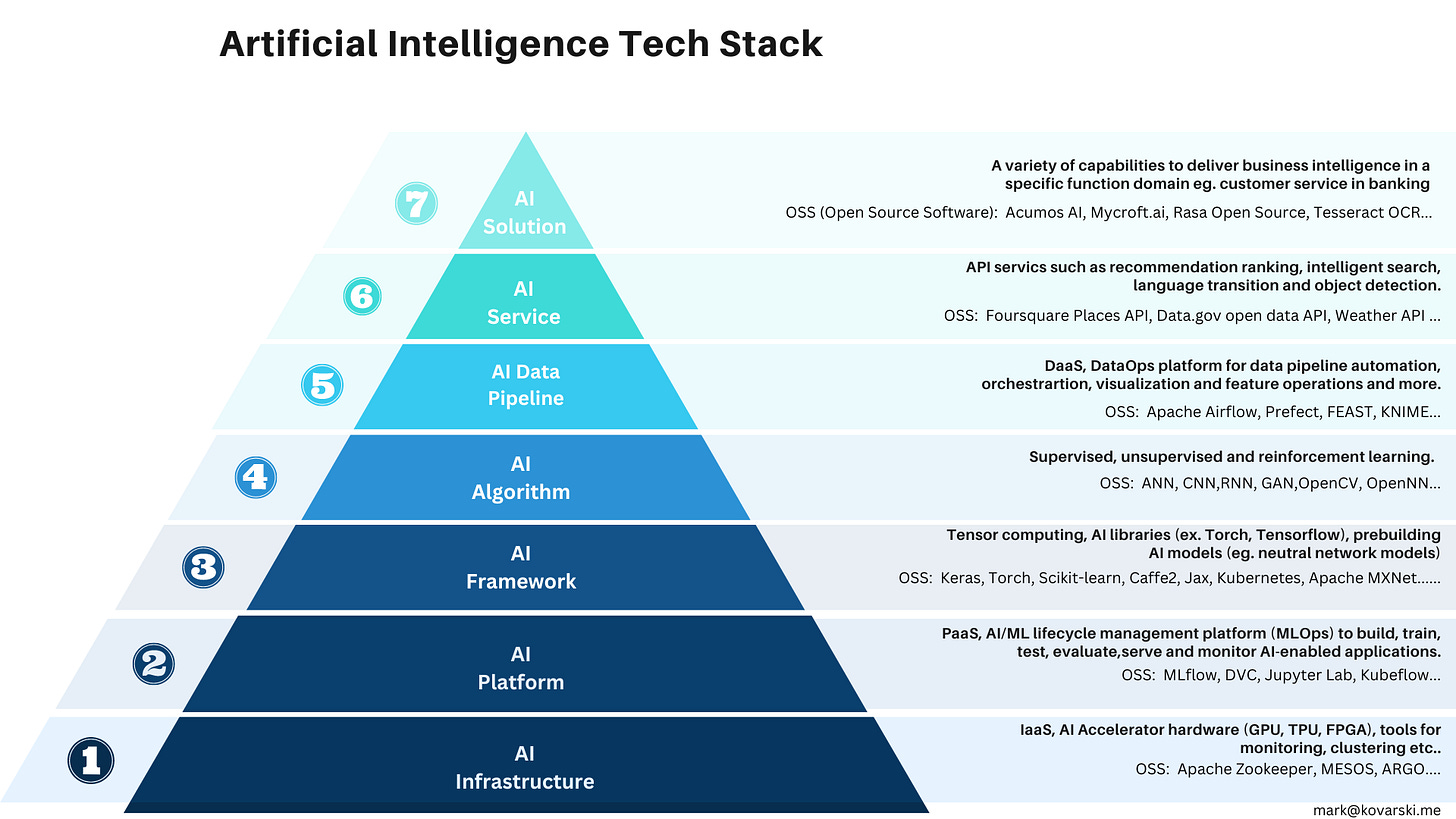

The AI tech stack tackles long-standing challenges: it reduces tight coupling between components and loosens the constraints of proprietary systems and vendor lock-in. This approach also enables the definition of service-level agreements (SLAs) and supports fault-tolerant deployment, helping to ensure the reliability and performance of AI systems.

Here is a breakdown of the AI layers:

AI Infrastructure Layer ⚙️📡:

Integrates IaaS components and accelerators (e.g., GPUs, TPUs, FPGAs) to provide hardware capacity for AI applications.

Includes additional services such as monitoring, clustering, and billing tools.

AI Platform Layer 🛠️🌐:

Sits atop the infrastructure layer and provides a unified user interface.

Facilitates collaboration across the ML lifecycle, including model building, training, evaluation, deployment, and monitoring.

Includes PaaS components (e.g., operating systems, databases) and MLOps capabilities.

AI Framework Layer 📚💻:

Hosts AI-specific software modules and tools for executing algorithms on the hardware.

Provides pre-built AI models, accelerator drivers, and support libraries.

Helps data scientists and developers build and deploy AI models faster.

AI Algorithm Layer 🧠📊:

Contains the set of AI training methods used by models for learning tasks.

Forms the core capability of AI models to process data and produce outputs.

AI Data Pipeline Layer 🛢️🔍:

Dedicated to data processing and management for AI models.

Integrates various data architectures and supports preprocessing and feature engineering.

Enables access to internal and external data sources.

AI Service Layer 📲🔧:

Offers ready-to-use, general-purpose APIs for AI-enabled services.

Passes information or raw data to existing applications or enterprise systems for execution.

Supports specific AI tasks in a general domain (e.g., image processing, NLP).

AI Solution Layer 🏢💡:

Delivers broader AI capabilities to address specific business problems in a particular industry or company domain.

Provides AI-enabled solutions for specific applications (e.g., intelligent customer solutions for banking).
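To illustrate how a layered stack reduces coupling and vendor lock-in, here is a minimal Python sketch. It assumes a hypothetical Service Layer contract (`EmbeddingService`) with two hypothetical vendor implementations and a hypothetical Solution Layer component (`SupportTicketRouter`); none of these names come from a real product. The "embeddings" are toy statistics, not real models. The point is that the Solution Layer depends only on the interface, so swapping vendors does not touch it.

```python
from typing import Protocol


class EmbeddingService(Protocol):
    """Hypothetical AI Service Layer contract: text in, vector out."""

    def embed(self, text: str) -> list[float]: ...


class VendorAEmbedder:
    """Hypothetical vendor A implementation (toy: character statistics)."""

    def embed(self, text: str) -> list[float]:
        return [float(len(text)), float(sum(c.isupper() for c in text))]


class VendorBEmbedder:
    """Hypothetical vendor B implementation (toy: word statistics)."""

    def embed(self, text: str) -> list[float]:
        words = text.split()
        return [float(len(words)), float(len(set(words)))]


class SupportTicketRouter:
    """Hypothetical AI Solution Layer component.

    It depends only on the EmbeddingService interface, so the vendor
    behind it can be replaced without changing this class.
    """

    def __init__(self, embedder: EmbeddingService) -> None:
        self.embedder = embedder

    def features(self, ticket: str) -> list[float]:
        return self.embedder.embed(ticket)


# The same Solution Layer code runs unchanged on either vendor.
for backend in (VendorAEmbedder(), VendorBEmbedder()):
    router = SupportTicketRouter(backend)
    print(type(backend).__name__, router.features("Card declined at ATM"))
```

A `Protocol` is used so that vendor classes satisfy the contract structurally, without inheriting from a shared base class owned by any one vendor.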

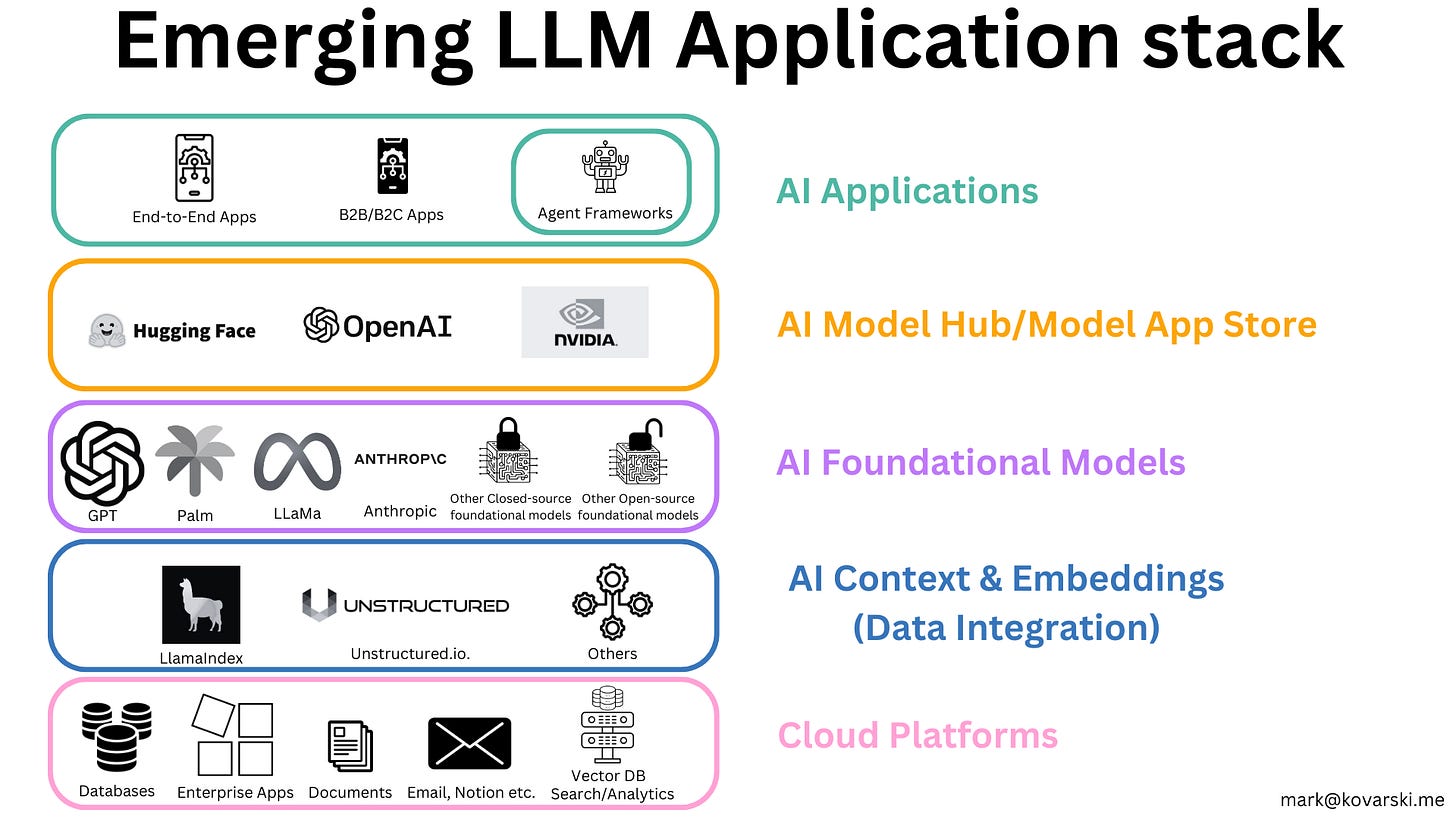

LLM-based applications are emerging as the dominant application paradigm for the future. Companies are integrating LLMs into their existing products, building new intelligent applications, and streamlining operations.

The concept of "Intelligent/Cognitive Apps" refers to a new class of application software that utilizes machine learning algorithms to gather information and make predictions about the world. These applications leverage advancements in cloud computing, connectivity, and data storage, and are expected to bring about significant changes in both the way software is built and how people interact with it.

From a software development perspective, the emergence of Intelligent/Cognitive Apps will require fundamental shifts in software architecture. Machine learning algorithms, which are central to these applications, require access to more compute processors, storage for vast amounts of sensor data, and new security protocols for inter-application communication. Public cloud infrastructure is seen as the most suitable platform to deliver this new architecture, making Intelligent/Cognitive Apps the next evolution of Software-as-a-Service (SaaS).

In terms of user experience, Intelligent/Cognitive Apps aim to go beyond traditional business software applications that primarily store and report on data. Instead of merely assisting users in their tasks, these apps are expected to intelligently work for users, leveraging what they have learned about the world to make autonomous decisions. The shift towards Intelligent/Cognitive Apps is deemed significant enough that modifying legacy applications to add intelligence may not be feasible for incumbent software vendors. The design and integration of intelligence should be integral to the application from the start.

Furthermore, foundation models are gaining significant attention as companies explore their potential for building Intelligent/Cognitive applications. Bridging the gap between these models and enterprise data requires a data framework: a platform that enables data ingestion, indexing, and retrieval for large language models (LLMs). The new LLM application stack includes foundation models, data frameworks, vector embeddings, and agent frameworks for app development.

The AI Context & Embeddings layer addresses the challenge of supplying relevant information to LLMs in a scalable and efficient way. LLMs often need context or data that was not part of their original training set to generate accurate answers. In-context learning addresses this: a limited set of helpful information is appended to the prompt at inference time, giving the LLM the context it needs to respond accurately.
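The in-context learning step can be sketched in a few lines of Python, assuming the relevant passages have already been retrieved as plain strings. `build_prompt` and its character budget are hypothetical illustrations, not any framework's API; production systems budget in tokens using the model's own tokenizer.

```python
def build_prompt(question: str, context_passages: list[str], max_chars: int = 2000) -> str:
    """Append retrieved context to the prompt (in-context learning).

    max_chars is a crude stand-in for a token budget: passages are added
    in order until the next one would exceed it.
    """
    selected: list[str] = []
    used = 0
    for passage in context_passages:
        if used + len(passage) > max_chars:
            break  # stop once the budget would be exceeded
        selected.append(passage)
        used += len(passage)
    context = "\n".join(f"- {p}" for p in selected)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt(
    "What is our refund window?",
    ["Refunds are accepted within 30 days of purchase.", "Shipping is free over $50."],
)
print(prompt)
```

The model itself is unchanged; only the prompt grows, which is why the amount of context that fits in the window matters.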

Language models represent text as vectors of numbers known as embeddings. These embeddings can be stored in specialized vector databases optimized for this type of data. Semantic search retrieves information stored as embeddings: it embeds the search input and finds the most similar vectors in the database, matching on "meaning" rather than on keywords. Because queries and stored documents are embedded into the same vector space, the passages a vector database returns can be placed directly into an LLM's prompt, enabling powerful in-context learning. Leveraging vector databases this way lets LLMs draw on a larger knowledge base and answer a wider range of queries.
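The core of semantic search is ranking stored vectors by similarity to a query vector. Here is a minimal Python sketch using cosine similarity and a linear scan; the three-dimensional vectors and document names are made up for illustration. Real embeddings have hundreds or thousands of dimensions, and vector databases typically use approximate nearest-neighbor indexes rather than scanning every vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as unrelated to everything
    return dot / (norm_a * norm_b)


def top_k(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[tuple[str, float]]:
    """Return the k most similar documents to the query, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy embeddings: each dimension loosely stands for a topic.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-faq": [0.1, 0.8, 0.2],
    "careers": [0.0, 0.1, 0.9],
}
print(top_k([0.8, 0.2, 0.0], index, k=2))
```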

By converting a knowledge base into embeddings with an embedding API (from OpenAI or other providers) and storing them in a vector database, developers let an application take a question about the knowledge base, search the vector database for similar passages, and have the LLM formulate a response that incorporates the retrieved information.
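The full flow (embed the knowledge base, store it, retrieve for a question, build the prompt) can be sketched end to end in Python. This is a minimal sketch under loud assumptions: `embed` is a hypothetical stand-in for a real embedding API (a hashed bag of words that captures word overlap, not meaning), and `InMemoryVectorStore` is a hypothetical toy, not a real vector database. The final LLM call is left out; the sketch stops at the prompt that would be sent.

```python
import hashlib
import math
import re

DIM = 256  # toy dimensionality; real embedding models differ


def embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding API: hashed bag of words.

    md5 is used instead of Python's built-in hash() so bucket assignment
    is stable across runs. Returns a unit-length vector.
    """
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryVectorStore:
    """Hypothetical minimal vector store holding (text, vector) pairs."""

    def __init__(self) -> None:
        self.items: list[tuple[str, list[float]]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        ranked = sorted(self.items, key=lambda it: -sum(a * b for a, b in zip(q, it[1])))
        return [text for text, _ in ranked[:k]]


knowledge_base = [
    "Refunds are accepted within 30 days of purchase.",
    "Standard shipping takes 3 to 5 business days.",
    "Support is available Monday to Friday.",
]
store = InMemoryVectorStore()
for doc in knowledge_base:
    store.add(doc)

question = "Are refunds accepted after purchase?"
context = store.search(question, k=1)
prompt = f"Context: {context[0]}\nQuestion: {question}\nAnswer:"
print(prompt)
```

Swapping `embed` for a real embedding model and the list for a vector database changes the quality of retrieval, not the shape of the pipeline.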

Companies are starting to build on LLM context windows in several ways. Structured prompting solutions such as Guardrails can improve the reliability of LLM outputs. Prompt engineering and management tools help improve the relevance of LLM responses. Additionally, caching frequently asked prompt-answer pairs lowers inference cost, because common queries can be answered without calling the model again.
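Caching prompt-answer pairs can be as simple as a least-recently-used map in front of the model. Here is a minimal Python sketch, assuming exact-match caching after light normalization; `PromptCache` and `fake_llm` are hypothetical names, and `fake_llm` stands in for a paid LLM API call. Semantic caching, which matches paraphrased questions by comparing embeddings, is a different and more involved technique not shown here.

```python
from collections import OrderedDict
from typing import Callable


class PromptCache:
    """LRU cache of prompt -> answer pairs for frequently asked questions.

    Prompts are normalized (lower-cased, whitespace collapsed) so trivial
    variations hit the same entry. Exact-match caching only.
    """

    def __init__(self, llm: Callable[[str], str], capacity: int = 1000) -> None:
        self.llm = llm
        self.capacity = capacity
        self.entries: OrderedDict[str, str] = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        return " ".join(prompt.lower().split())

    def ask(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        self.misses += 1
        answer = self.llm(prompt)  # the expensive model call
        self.entries[key] = answer
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict least recently used
        return answer


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a paid LLM API call."""
    return f"answer to: {prompt.strip()}"


cache = PromptCache(fake_llm, capacity=2)
cache.ask("What are your opening hours?")
cache.ask("what are your   opening hours?")  # normalized to the same key
print(cache.hits, cache.misses)
```

Only the first of the two equivalent questions reaches the model; the second is served from memory.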

The AI landscape is evolving rapidly. A structured AI Tech Stack provides a framework for reliable and performant AI systems. Moreover, the rise of LLM-based applications is transforming the application paradigm, offering immense potential for businesses. Entrepreneurs must seize this opportunity by focusing on accessible UIs, fine-tuning models, and even building new models to drive innovation. Now is the time to embrace the power of AI and leverage foundation models and data frameworks to revolutionize your business. Take action and be at the forefront of this transformative wave.

Start building, start growing. 🌱📈

📑 NEWS & ANNOUNCEMENTS

Marc Andreessen Just Published A New Post Titled: “Why AI Will Save the World”

The era of Artificial Intelligence is here, and boy are people freaking out.

Fortunately, I am here to bring the good news: AI will not destroy the world, and in fact may save it.

Machine Learning/Artificial Intelligence Testing for Production

As AI/ML models increasingly play a role in automation and decision-making, ensuring their reliability becomes crucial. This article introduces a new approach to testing AI/ML models for production, providing insights into the testing workflow, metrics, tools, and more. Read on to discover this innovative method and its significance in evaluating the trustworthiness of ML models used in decision-making processes.

How to train your own Large Language Models

The advent of Large Language Models (LLMs) has brought about a remarkable transformation in the AI domain. However, the majority of companies currently do not possess the capability to train these models on their own, relying instead on a limited number of major tech companies for access. Replit, on the other hand, has taken substantial strides in constructing the essential infrastructure for training their own LLMs from the ground up. This blog post delves into the details of Replit's journey, outlining the methodology they employed to accomplish this feat.

CompressGPT: Decrease Token Usage by ~70%

The effective context window of GPT-* models can be extended by having the LLM compress a prompt and then feeding the compressed prompt into another instance of the same model. This approach, however, presents some significant challenges, and in this article the author explains an efficient way to address them.

FriendlyCore: A novel differentially private aggregation framework

Differential privacy (DP) ML algorithms safeguard user data by constraining the impact of individual data points on aggregated output, supported by a mathematical guarantee. Nevertheless, DP algorithms often exhibit reduced accuracy compared to their non-private counterparts. Explore this article to discover strategies for overcoming this challenge and enhancing the accuracy of DP algorithms.
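To make "constraining the impact of individual data points" and the resulting accuracy cost concrete, here is a minimal Python sketch of the classic Laplace mechanism for a private mean. This is the textbook mechanism, not FriendlyCore's algorithm. It assumes the record count is public and that neighboring datasets differ by replacing one record, so clipping each value to `[lower, upper]` bounds the mean's sensitivity at `(upper - lower) / n`. The salary figures are made up for illustration.

```python
import random


def dp_mean(values: list[float], lower: float, upper: float, epsilon: float,
            rng: random.Random) -> float:
    """Differentially private mean via the Laplace mechanism.

    Clipping to [lower, upper] limits how much any one record can move the
    mean; Laplace noise scaled to that limit / epsilon hides its effect.
    """
    n = len(values)
    clipped = [min(max(v, lower), upper) for v in values]
    true_mean = sum(clipped) / n
    sensitivity = (upper - lower) / n
    scale = sensitivity / epsilon
    # Laplace(0, scale) noise as the difference of two Exponential(mean=scale) draws.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_mean + noise


rng = random.Random(0)
salaries = [52_000, 61_000, 58_000, 1_000_000, 49_000]  # one extreme outlier
print(dp_mean(salaries, lower=0, upper=150_000, epsilon=1.0, rng=rng))
```

Both sources of error are visible here: clipping biases the result (the outlier counts as 150,000), and smaller `epsilon` means stronger privacy but larger noise. Those are the accuracy losses that better DP aggregation methods try to reduce.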

Distributed Hyperparameter Tuning in Vertex AI Pipeline

Vertex AI pipelines provide a seamless approach to implementing end-to-end ML workflows with minimal effort. This insightful article introduces a fresh method for enabling distributed hyperparameter tuning within GCP's Vertex AI pipeline. Expand your knowledge by delving into the details!

Andrew Ng Releases 3 New Generative AI Courses

The new courses are:

- Building Systems with the ChatGPT API

- LangChain for LLM Application Development

- How Diffusion Models Work

Google AI Launches a Free Learning Path on Generative AI

The learning path guides you through a curated collection of content on Generative AI products and technologies, from the fundamentals of Large Language Models to creating and deploying generative AI solutions on Google Cloud.

Top ML Papers 🏆

Let’s Verify Step by Step achieves state-of-the-art mathematical problem solving by rewarding each correct reasoning step in a chain of thought (process supervision) instead of rewarding only the final answer; the model solves 78% of problems from a representative subset of the MATH test set. (paper)
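Step-level rewards can be turned into a score for a whole solution and used to pick the best of several sampled solutions. Here is a minimal Python sketch, assuming the scoring rule the paper describes: the probability that every step is correct, computed as the product of per-step correctness probabilities. The probabilities below are made up; in the paper they come from a trained process reward model.

```python
import math


def prm_score(step_probs: list[float]) -> float:
    """Probability that every step is correct: product of per-step probabilities."""
    return math.prod(step_probs)


def best_of_n(candidates: dict[str, list[float]]) -> str:
    """Pick the candidate solution whose steps are jointly most likely correct."""
    return max(candidates, key=lambda name: prm_score(candidates[name]))


# Hypothetical per-step correctness probabilities for two sampled solutions.
candidates = {
    "solution_a": [0.95, 0.90, 0.40],  # one doubtful step drags the score down
    "solution_b": [0.85, 0.80, 0.85],
}
print(best_of_n(candidates))  # prints "solution_b"
```

Solution A scores about 0.342 and solution B about 0.578, so a single weak step is enough to rank an otherwise confident solution lower.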

No Positional Encodings (NoPE) shows that explicit position embeddings are not essential for decoder-only Transformers, and that other positional encoding methods such as ALiBi and Rotary are not well suited for length generalization. (paper)

BiomedGPT, a unified biomedical generative pretrained transformer model for vision, language, and multimodal tasks. Achieves state-of-the-art performance across 5 distinct tasks with 20 public datasets spanning over 15 unique biomedical modalities. (paper)

QLoRA, an efficient fine-tuning technique that significantly reduces memory usage, enabling fine-tuning of a 65B-parameter model on a single 48GB GPU while preserving full 16-bit fine-tuning task performance. (paper)
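A quick back-of-the-envelope calculation shows why 4-bit weights are what make a 65B model fit on one 48GB GPU. This Python sketch counts the frozen base-model weights only; it deliberately ignores quantization constants, the small trainable LoRA adapters and their optimizer state, and activations, all of which add to the real footprint.

```python
def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


PARAMS_65B = 65e9
for label, bits in [("16-bit", 16), ("4-bit", 4)]:
    print(f"{label}: {weight_memory_gb(PARAMS_65B, bits):.1f} GB")
# 16-bit: 130.0 GB  -> far more than a single 48GB GPU
# 4-bit: 32.5 GB    -> leaves headroom for adapters and activations
```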

LIMA, a 65B-parameter LLaMA model fine-tuned on only 1,000 carefully curated prompts and responses, without any RLHF. LIMA generalizes well to unseen tasks that were not included in its training data. In 43% of cases, its responses are equivalent to or preferred over GPT-4's, and the rate is even higher when compared with Bard. (paper)

Voyager, a lifelong learning agent in Minecraft powered by large language models (LLMs). Voyager autonomously explores the world, acquires new skills, and makes novel discoveries without human intervention. (paper)

Gorilla, a fine-tuned LLaMA-based model that outperforms GPT-4 at writing API calls. This capability helps LLMs identify the appropriate API, improving their ability to interact with external tools and accomplish specific tasks. (paper)

In "The False Promise of Imitating Proprietary LLMs," the authors offer a thorough critique of models fine-tuned on the outputs of more powerful models. They challenge the notion that model imitation is a viable approach and argue that the more impactful way to improve open-source models is to develop better base models. (paper)

"Model Evaluation for Extreme Risks" explains why thorough model evaluation is crucial for identifying extreme risks and for making responsible decisions about model training, deployment, and security. It argues that evaluation must go beyond standard practice, for example by testing for dangerous capabilities, so that high-stakes decisions are well informed. (paper)

Reinventing RNNs for the Transformer Era (RWKV) introduces an approach that combines the efficient, parallelizable training of Transformers with the efficient inference of RNNs, achieving performance comparable to similarly sized Transformers. It is a significant step toward adapting RNNs to the Transformer era. (paper)

Prompt Engineering Fun

Editorial Style Photo, Eye Level, Modern, Living Room, Fireplace, Leather and Wood, Built-in Shelves, Neutral with pops of blue, West Elm, Natural Light, New York City, Afternoon, Cozy, Art Deco:: Additive::0 --ar 16:9

Editorial Style photo, Low Angle, Mid-Century, Lounge, Armchair, Leather, Wood, Textiles, Wall Decor, Rug Detail, Earthy Tones, Knoll, Floor Lamp, Table Lamp, Palm Springs Modern Home, Afternoon, Relaxing:: Additive::0 --ar 16:9

Editorial Style Photo, Off-Center, Mid-Century Modern, Living Room, Eames Lounge Chair, Wood, Leather, Steel, Graphic Wall Art, Bold Colors, Geometric Shapes, Design Within Reach, Track Lighting, Condo, Morning, Playful, Mid-Century Modern, Iconic, 4k --ar 16:9

New Tools

Cody AI - AI Programming Assistant (The AI that knows your entire codebase)

Psychic - Web Service Automation (An open-source integration platform for unstructured data)

Quivr - Knowledge Management (Your second brain)

RasaGPT - Large Language Model Tools (The headless LLM chatbot platform)

Flowise - Large Language Model Tools (Drag and drop UI to build your customized LLM flow using LangchainJS)

LocalAI - Large Language Model Tools (Local models on CPU with OpenAI compatible API)

PentestGPT - Penetration Testing Tools (A GPT-empowered penetration testing tool)

Wishing you an incredible week filled with endless possibilities and success! ✨

If you've found this newsletter to be valuable, don't keep it to yourself - share the awesomeness with a friend! 😎 And if you haven't already, why not subscribe and stay in the loop for more exciting updates? Together, let's embark on this journey of knowledge and discovery.