The cost of training LLMs

How much does it cost to train an LLM?

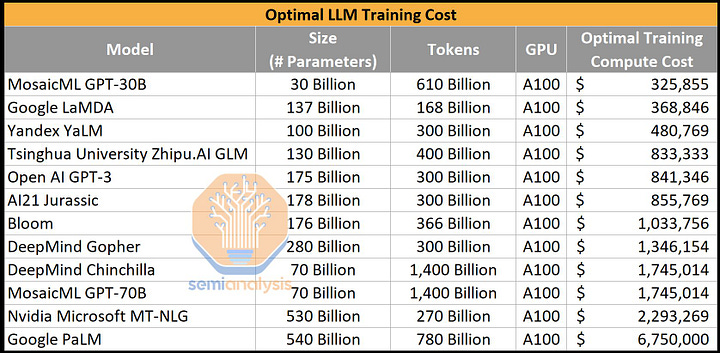

The cost of training an LLM depends on factors such as model size, the amount of training data, the hardware used, and training time. Training large models on large volumes of high-quality data is expensive and requires significant computational resources. To make LLM training more cost-effective, you can reduce the model size, start from pre-trained models instead of training from scratch, use data augmentation, apply early stopping, and tune the learning-rate schedule. These strategies can reduce training time and compute requirements while still achieving good performance on specific tasks.
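To make the link between model size, data, hardware and cost concrete, here is a minimal sketch of a training-cost estimator. It assumes the commonly used rule of thumb that training compute is about 6 × N × D FLOPs (N parameters, D training tokens); this approximation and all hardware numbers in the example (312 TFLOP/s peak, 40% utilization, $2 per GPU-hour) are illustrative assumptions, not figures from this article.

```python
# Rough training-cost estimator (illustrative sketch).
# Assumption: total training compute ~= 6 * N * D FLOPs.
# All hardware/price inputs below are hypothetical example values.


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate total training compute in FLOPs via the 6*N*D rule of thumb."""
    return 6.0 * n_params * n_tokens


def training_cost_usd(
    n_params: float,
    n_tokens: float,
    peak_flops_per_gpu: float,
    utilization: float,
    usd_per_gpu_hour: float,
) -> float:
    """Estimate training cost in USD from model size, data size and hardware."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    # FLOP/s each GPU actually sustains, after accounting for utilization.
    effective_flops_per_gpu = peak_flops_per_gpu * utilization
    gpu_seconds = training_flops(n_params, n_tokens) / effective_flops_per_gpu
    gpu_hours = gpu_seconds / 3600.0
    return gpu_hours * usd_per_gpu_hour


if __name__ == "__main__":
    # Hypothetical run: 7B parameters, 140B tokens, A100-class GPUs
    # (312 TFLOP/s peak BF16), 40% utilization, $2 per GPU-hour.
    cost = training_cost_usd(7e9, 140e9, 312e12, 0.40, 2.0)
    print(f"Estimated training cost: ~${cost:,.0f}")
```

Because cost is linear in both N and D under this approximation, halving the model size or the token count halves the estimated bill, which is why reducing model size and reusing pre-trained models are effective levers.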

Estimating ChatGPT's costs is tricky because several variables are unknown. Early estimates suggest that ChatGPT costs about $694,444 per day to operate in compute hardware costs alone. OpenAI is estimated to require ~3,617 HGX A100 servers (28,936 GPUs) to serve ChatGPT, and the estimated cost per query is 0.36 cents. Note that these are serving (inference) costs, not training costs.
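The quoted serving figures can be cross-checked with a little arithmetic. The sketch below assumes each HGX A100 server holds 8 GPUs (which matches 3,617 servers ≈ 28,936 GPUs) and derives the hourly GPU price and daily query volume that the quoted numbers imply; these derived values are consequences of the estimates above, not independently reported figures.

```python
# Back-of-the-envelope check of the ChatGPT serving estimates quoted above.
# Assumption: each HGX A100 server holds 8 GPUs.

DAILY_COST_USD = 694_444     # estimated compute-hardware cost per day
SERVERS = 3_617              # estimated number of HGX A100 servers
GPUS_PER_SERVER = 8          # assumption: 8-GPU HGX A100 boards
COST_PER_QUERY_USD = 0.0036  # 0.36 cents per query


def implied_figures():
    """Return (total GPUs, implied USD per GPU-hour, implied queries per day)."""
    gpus = SERVERS * GPUS_PER_SERVER
    usd_per_gpu_hour = DAILY_COST_USD / (gpus * 24)
    queries_per_day = DAILY_COST_USD / COST_PER_QUERY_USD
    return gpus, usd_per_gpu_hour, queries_per_day


if __name__ == "__main__":
    gpus, rate, qpd = implied_figures()
    print(f"GPUs: {gpus:,}")                                 # 28,936
    print(f"Implied cost per GPU-hour: ${rate:.2f}")         # $1.00
    print(f"Implied queries per day: {qpd / 1e6:.0f} million")  # 193 million
```

In other words, the daily figure is consistent with pricing each GPU at roughly $1 per hour, and together with the 0.36-cent per-query estimate it implies on the order of 193 million queries per day.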

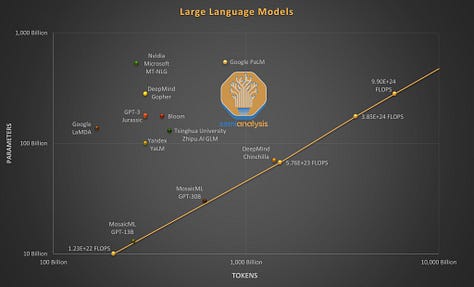

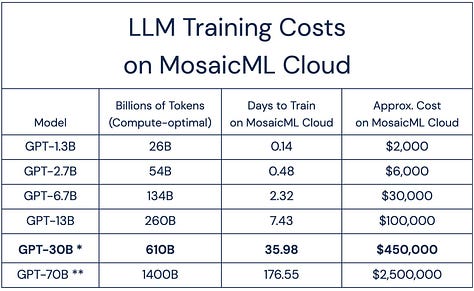

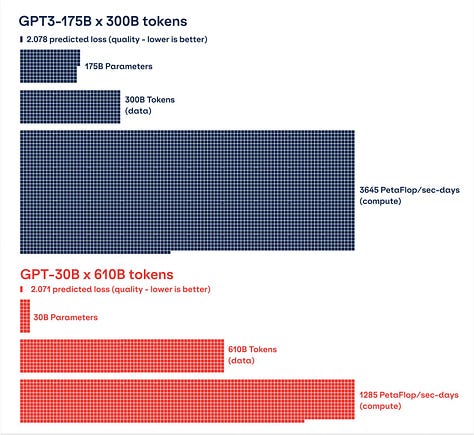

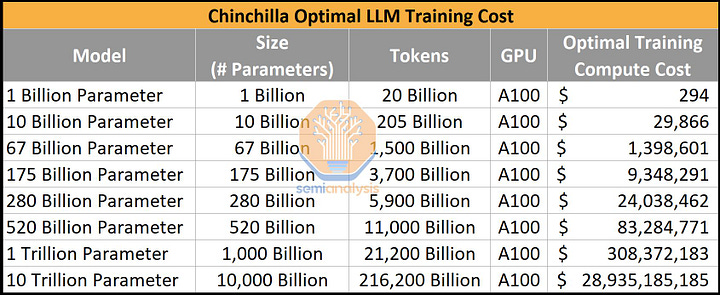

Training a 60-70B LLM would cost around $2.5M on the MosaicML cloud. Recent models such as Chinchilla and AlexaTM 20B outperform GPT-3 by using *fewer parameters and more data*. Following that approach, a recipe of a 30B-parameter GPT model trained on 610B tokens (GPT-30B, 610B tokens) should match the quality of the original GPT-3, at a cost of only ~$450k. MosaicML already claims to be able to train GPT-3-quality models for less than $500,000 and Chinchilla-size and -quality models for ~$2,500,000.
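One way to sanity-check these two price points is to convert each into a cost per unit of training compute. The sketch below again assumes the 6 × N × D FLOPs approximation, and assumes the Chinchilla-size run uses Chinchilla's own configuration of 70B parameters and 1.4T tokens; neither assumption comes from MosaicML's stated claims.

```python
# Compare the two quoted MosaicML price points per unit of training compute.
# Assumptions: training FLOPs ~= 6 * N * D, and the Chinchilla-size run
# uses 70B parameters and 1.4T tokens (Chinchilla's configuration).

RECIPES = {
    # name: (parameters, training tokens, quoted cost in USD)
    "GPT-30B, 610B tokens": (30e9, 610e9, 450_000),
    "Chinchilla-size 70B, 1.4T tokens": (70e9, 1.4e12, 2_500_000),
}


def train_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute with the 6*N*D rule of thumb."""
    return 6.0 * n_params * n_tokens


def usd_per_1e18_flops(n_params: float, n_tokens: float, cost_usd: float) -> float:
    """Quoted cost divided by training compute, in USD per 10^18 FLOPs."""
    return cost_usd / (train_flops(n_params, n_tokens) / 1e18)


if __name__ == "__main__":
    for name, (n, d, cost) in RECIPES.items():
        print(
            f"{name}: {train_flops(n, d):.2e} FLOPs, "
            f"${usd_per_1e18_flops(n, d, cost):.2f} per 10^18 FLOPs"
        )
```

Under these assumptions both recipes land at roughly $4 per 10^18 FLOPs, so the gap between ~$450k and ~$2.5M is explained almost entirely by the larger model and dataset rather than by a different price for compute.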